Meta Description: Learn the role of AI in student language polishing, how university policies treat it, how to avoid false-positive detection, and an ethical workflow that protects academic integrity.

In 2025, universities have moved from a posture of “prohibition” to one of “thoughtful integration.” There is no longer a universal ban on digital tools. Instead, the conversation has become more nuanced: where does the human mind end and the algorithm start?

The numbers bear this out. The 2025 Student Generative AI Survey by HEPI reports that roughly 92% of university students use AI tools in their studies on a regular basis, and the 2025 Quizlet survey reports that 89% of students use AI for school. But the pervasiveness of generative AI has made false positives a new source of anxiety.

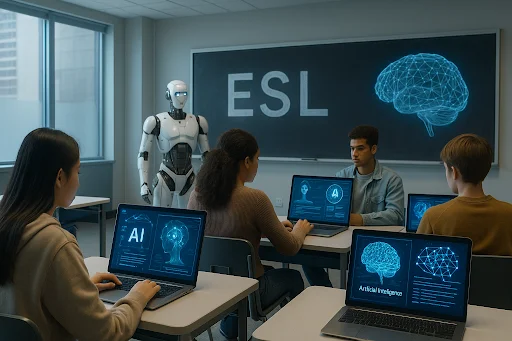

For students, especially those who speak English as a Second Language (ESL), the line between “cheating” by generating text and “polishing” by correcting grammar is the most important one in academic work. This guide explains how to read your university’s policies and how to use AI tools ethically so that they sharpen, rather than replace, your own thinking.


The Spectrum of University Policies in 2025

It’s no longer the case that all universities have a blanket “No AI” policy. In 2025, universities fall into a few distinct categories based on how they handle AI usage. Once you identify which category your university falls into, the rest of the puzzle starts to fall into place.

● The “Permitted with Citation” Model (e.g., Cornell, UC Berkeley):

Many top-tier US universities now treat AI as a consultant. For instance, Cornell University’s academic integrity policy now explicitly lists “grammar and spell-checking” as a permissible use, as long as the ideas in the text aren’t generated by the algorithm. UC Berkeley generally follows a “professor-discretion” model, in which “grammar” and “brainstorming” uses are usually acceptable when the instructor explicitly permits them. You still have to cite the tool, just as you would cite a website or a reference book.

● The “Strictly Formative” Model (e.g., Oxford, Cambridge):

At other schools, there’s a hard divide between learning and handing in. In this world, AI is good for explaining a concept to you or doing a formative assessment to check your understanding, but polishing a final written product for submission—unless you declare it—might be considered misconduct.

● The “No Tolerance” Departments:

Even at an AI-friendly university, individual departments can take a zero-tolerance stance on algorithmic help. These departments generally fall under Humanities and Creative Writing. In these courses, even using basic AI to check grammar and spelling might trigger a citation review.

The “Robotic” Trap for ESL Students

A major ethical concern in 2025 is the bias inherent in AI detection software. Algorithms are trained to flag text that has low “perplexity”—writing that is highly predictable, structurally rigid, and grammatically perfect.
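For reference, perplexity has a standard definition in language modelling. The formula below is that textbook definition, not the scoring rule of any particular detector, whose internals are generally not public. The symbols are introduced here for illustration: $w_1, \dots, w_N$ are the tokens of the text, and $p(w_i \mid w_{<i})$ is the probability a language model assigns to token $w_i$ given the tokens before it.

```latex
\mathrm{PPL}(w_1, \dots, w_N)
  = \exp\!\left( -\frac{1}{N} \sum_{i=1}^{N} \log p\!\left(w_i \mid w_{<i}\right) \right)
```

When every word is easy for the model to predict, each $p(w_i \mid w_{<i})$ is close to 1, the sum of logs is close to 0, and the perplexity is low. That is the sense in which cautious, textbook-style prose can score as "predictable."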

This creates a paradox for non-native English speakers. When an ESL student writes an essay, they often adhere strictly to textbook grammar rules and avoid complex idioms to stay safe. To a detector, this “perfect but rigid” writing looks identical to machine-generated text.

This puts students in a difficult position: if they use standard tools like Grammarly or ChatGPT to fix their errors, the text becomes even more “sterile” and likely to trigger a false positive. If they don’t, they risk lower grades due to language barriers. This is not about cheating; it is about the struggle to be understood without being falsely accused by a machine.

The Ethical Workflow: From Draft to Submission

To polish your language without losing your academic voice (or triggering a false alarm), you need a workflow that prioritizes human oversight. The goal is to correct errors while maintaining the “burstiness”—the natural variation in sentence length and structure—that defines human writing.
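To make “burstiness” concrete, here is a minimal Python sketch that measures how much sentence length varies in a passage. It is an illustration only: the function names, the naive sentence splitter, and the choice of coefficient of variation as the metric are assumptions made for this example, not the method any particular detector is known to use.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Return the word count of each sentence.

    Sentences are split naively on '.', '!' and '?', which is an
    assumption for illustration and will mishandle abbreviations.
    """
    sentences = [s.strip() for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence lengths (std dev / mean).

    0.0 means every sentence has the same length; larger values mean
    more variation. This metric is a hypothetical stand-in.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "The cat sat down. The dog ran off. The bird flew away."
varied = "Stop. The dog ran across the wide field before anyone noticed. Why?"

print(round(burstiness(uniform), 2))  # 0.0
print(round(burstiness(varied), 2))   # 1.06
```

The first passage has three four-word sentences, so its score is zero; the second mixes one-word and ten-word sentences and scores much higher. A rough check like this can help you see whether a polishing pass has flattened your rhythm, but it says nothing certain about how a real detector will judge the text.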

Phase 1: The Human Draft

Always start with a blank page. The logic, the arguments, and the evidence must come from your own mind. If you use AI to generate the first draft, you have already crossed the line of academic integrity.

Phase 2: The “Sterile” Polish

You might use a tool to fix subject-verb agreement or awkward phrasing. At this stage, your text becomes cleaner, but it also becomes “flatter.” Standard AI editors tend to homogenize your writing, stripping away the unique quirks that make it sound like you. This is the danger zone for detection.

Phase 3: Restoring Natural Variation (Humanizing)

This is the most overlooked step. After you polish your grammar, your text may read as robotic. This is where specialized tools like GPTHumanizer fit into the workflow. Unlike standard editors that simplify text, a humanizer is designed to reintroduce the complexity and sentence variance that standard grammar checkers often remove.

By processing your polished draft through a ChatGPT humanizer, you aren’t changing the meaning of your work; you are adjusting the syntax to ensure it retains a human cadence. This step helps prevent the “sterilization” effect that leads to false accusations, ensuring your submission is both grammatically correct and naturally phrased.

Phase 4: The Final Read-Through

Never submit the output of any tool blindly. You must read the final version to ensure the tone matches your personal voice. If a word feels out of place, change it back. You are the author; the technology is merely the copyeditor.

Conclusion

In 2025, academic integrity is about ownership. You are earning a degree to show that you can think for yourself. If technology helps you present your own ideas clearly, using it is a natural evolution of the writing process. Knowing your university’s policies, and knowing your tools, will allow you to thrive in the digital age.

FAQ: AI and Academic Integrity

Could I get in trouble for using AI to check my grammar?

It depends on your university, but in most cases you can use AI just to check your grammar and spelling, much as you would use a regular spell checker. If the AI wrote an entire paragraph or changed your argument, it counts as plagiarism. Check your syllabus first.

What’s the difference between AI generating and AI humanizing?

Generating creates new content from scratch (e.g., asking an AI to write an essay). Humanizing takes existing content and adjusts the sentence structure and tone to make it sound more natural and less robotic. The latter focuses on style and delivery rather than content creation.

Why does my original writing get flagged by AI detectors?

AI detectors look for predictable sentence structure. If you are not a native speaker of the language you are writing in, you may write in very textbook English, which a detector can mistake for AI output. Over-editing your writing with standard grammar tools and style guides also removes some of the human variation and can cause false positives.

Do I have to cite AI if I only used it for the copyediting?

Yes, in 2025 that is the safest approach. All the major academic styles (APA, MLA, Chicago) offer guidance on citing AI. A short acknowledgement statement (e.g., “I used AI tools for copyediting and linguistic refinement”) helps protect you from accusations of cheating.
